Why this matters for social media

One of the most nauseating phrases ever uttered is “If you love something, set it free… If it comes back, it’s yours, if it doesn’t, it never was yours.” I am not sure to whom to attribute this, but I apologize in advance for even bringing it up.

But when it comes to social media, it matters. A lot. Set it free.

I am in the middle of AGBeat columnist Maddie Grant and Jamie Notter’s book, “Humanize,” in which, early on, they make the case that upper-level management in organizations needs to understand that participating meaningfully in social media – and being authentic and human – means letting go of the message. You can’t control it anymore, and if you are a large organization, the discussion is already taking place “With or Without You,” to borrow the title of the U2 song.

Large and medium-sized organizations used to get their messages out through a one-to-many communications method, most often a press release. The intended effect was to create an inverted funnel in which the organization controlled the message, the timing and the reaction. Gone, gone, gone.

Today’s communications environment

I like to compare today’s communications environment (heavily influenced by social media) to being in the middle of a tornado. The discussions, debates, arguments and the like are swirling around you, and they are taking place regardless. By being authentic and “human” (thanks, Maddie and Jamie), organizations can hope at times to participate in the wind and sometimes even redirect it slightly, but you can’t stop a tornado.

When major corporations attempt to violate the spirit of social media – being authentic, listening and participating in conversations with customers or other stakeholders – bad things happen.

What bad things happen?

Last week, I was listening to my favorite podcast, “For Immediate Release,” in which the hosts, Shel Holtz and Neville Hobson, discussed a Google+ comment by Scott Monty, the head of social media at Ford Motor Company. Scott’s Google+ post (which, as I write this, is no longer available at its original link) read:

“Shel & Neville – not sure if you guys have covered this on the show, but what are your thoughts on companies posting their own Terms of Use on Facebook? I noticed this one because someone called out that National doesn’t allow UGC [user generated content] that criticizes them. Our own legal department is concerned, because FB’s TOS are designed to protect FB, not brands.”

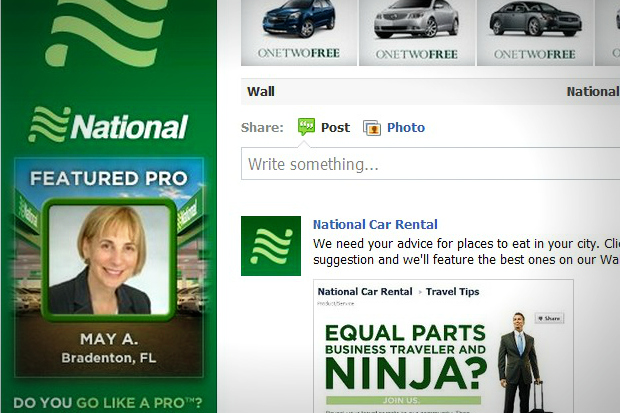

Shel and Neville went on to discuss reports that National Car Rental was deleting Facebook Wall posts that were negative toward the company. This is a serious social media infraction. It violates the spirit of creating conversation and ruins an opportunity to engage with customers and to offer the 33,638 people who have “liked” the company a front-row view of an organization that is open, honest and willing to take on problems. Note: I have no third-party confirmation that National is doing this, but I did find the following in the terms of use on its Facebook page:

You may not post any User Content that:

- Infringes any person’s legal rights, including any right of privacy and publicity

- Is defamatory, infringing, abusive, obscene, indecent, deceptive, threatening, harassing, misleading or unlawful;

- Contains any code, application, software or material protected by intellectual property laws or any malicious code including any programs that may damage the operation of another person’s computer or which contains any other form of virus or malware;

- Disparages, slanders, criticizes, or maligns National;

- Is commercial in nature and advertises any product, service, or good other than National, unless you have obtained National’s prior consent.

- You will not rely upon any claim or statement made, or anything contained in any User Content. This Facebook Fan Page is for entertainment only. It is not an authorized source of information about National or our brands, vehicles, or services. If you are looking for that type of information, please visit our website at www.nationalcar.com.

The bullet point “disparages, slanders, criticizes, or maligns National” is the one that caught my attention. What if you rent a National car that turns out to be a clunker, and it dies on your way to an important meeting? Does that mean that you cannot “criticize” the company in a public way on a platform set up by National?

If you can “like” them, why can’t you publicly “dislike” them? If this is the case, I wonder why National even bothers having a Wall if it is preordained that everything will be sunshine and chocolate, and anything that isn’t may be removed.

The takeaway:

My final point: Scott Monty is well known and well respected as the head of social media at Ford. National Car Rental rents Ford cars. When I went to look for the original Google+ post (I found what I have posted above on the FIR Google+ account), the comment had been removed. It could well be a technical glitch. I hope so.

I believe that Scott truly gets social media, but I sincerely hope that his comment was not censored by National or Ford for “disparaging, slandering, criticizing, or maligning National.”

So, National Car Rental: if you love social media, and if you expect to listen, participate, be authentic and human, and respond to consumer complaints on a platform that your company set up, then if you love Facebook, set it free.

Mark Story is the Director of New Media for the U.S. Securities and Exchange Commission in Washington, DC. He has worked in the social media space for more than 15 years for global public relations firms, most recently, Fleishman-Hillard. Mark has also served as adjunct faculty at Georgetown University and the University of Maryland. Mark is currently writing a book, "Starting a Career in Social Media" due to be published in 2012.