Letting AI run an actual company

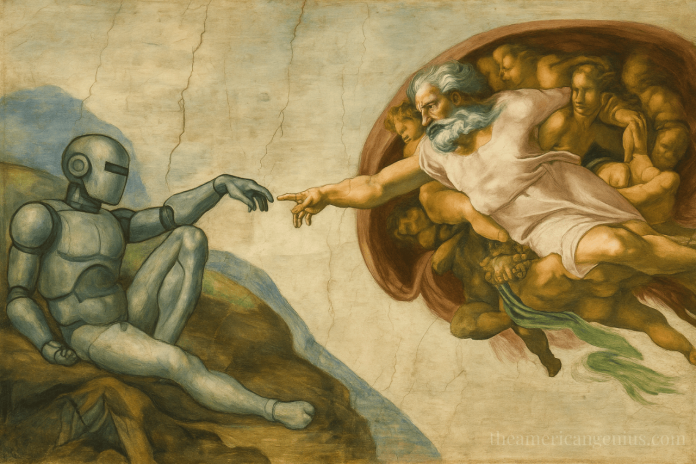

Picture this: You’re sitting in a college lab with a bunch of researchers, nerding out about this cool idea. “What if we made an all-AI software startup with AI agents performing all of the tasks?” You start tossing ideas back and forth and realize it wouldn’t actually be all that hard – but would it work? Artificial intelligence (AI) can automate the hell out of a calendar, but can it run an entire business?

That’s exactly what happened at Carnegie Mellon University. The first step in creating “TheAgentCompany” was adding artificial workers (bots) from OpenAI, Meta, Google, and Anthropic to act as software engineers, financial analysts, and project managers. They worked alongside simulated coworkers, like a fake Chief Technology Officer (CTO) and a fake HR department, that they could turn to for help. The autonomous agents could use the internet for information, consult an employee handbook, internal websites, and a Slack-like chat program, write code, organize info into spreadsheets, and even communicate with “coworkers.”

So what happened when they hit the “let’s do this” button? It failed. Almost comically. The AI agents excelled at having meetings (a LOT of meetings), had a lot of internal conflicts, and ended up with no product. It was essentially a parody of a stereotype of a comedy show about startups, all gloriously powered by AI itself. It’s worth reading about if you want the full story before we dive into why it’s more than just hilarious.

So this is what happens when you put AI agents in charge of the whiteboard without giving anyone a damn dry erase marker.

This failure wasn’t just predictable – it was inevitable

Artificial intelligence models, especially Large Language Models (LLMs), are mind-blowingly phenomenal at matching patterns, but they’re not inventors. They’re still limited by their programming, and as they say – garbage in, garbage out.

But we’re no longer strictly at the garbage in, garbage out phase, are we?

If we’re being honest with ourselves, that ended when we graduated from simple website chatbots where you’d load your FAQ documents and allow people to have “human” sounding conversations with a bot based on that limited data set you’d fed it.

Today, AI is incredible at mimicry, synthesis, and analysis. But it struggles with originality, creation, nuance, and even judgment. It also struggles with prioritization in ambiguous, high-stakes environments, as the Carnegie Mellon researchers discovered firsthand.

This isn’t the first time in recent memory that we’ve collectively hoped that a technology would solve countless problems and propel us into the future. Think back to the metaverse, NFTs, and even blockchain technologies – all incredible (and highly hyped) technological advances, but the public mistook these tools for strategies. Just as most of us are doing with AI in this historic moment.

Where AI actually shines (since it can’t run a business yet)

I’m not here to dog on AI. In fact, I use it extensively in my daily life now, and I am enamored with much of what it can do. I love Midjourney for image creation and I use it a lot (too much). Sora is an entertaining way to create interesting videos.

But with those tools, the stakes are low and failure has no real consequences.

AI is an incredible research assistant. It can do market analysis, code review, content summaries, and customer sentiment breakdowns like no one’s business. It’s a huge time saver. But it isn’t a co-founder. Yet.

What we must all understand is that AI isn’t replacing humans; it’s augmenting smarter human leadership. We’re in the middle of an evolution at a pace we’ve never experienced before, and we’re feeling it out together.

But it’s all still novel, so we keep handing over our vision to algorithms that can’t see, we laugh at AI agents failing an experiment, and we lose the plot.

So why do we keep falling for the illusion of agency?

First and foremost, we do what humans always do – we anthropomorphize. We want AI to be a magical wizard genie and solve our problems. But we also want it to be a peer. We anthropomorphize our pets, so why wouldn’t we do it with AI and tell it please and thank you? (We say it’s “just in case it becomes sentient,” but really it’s because it feels unnatural not to assign human features when typing to something that seemingly types back to us.)

Meanwhile, we continue to hear the drumbeat of “AI is going to take your job.” And while it will absolutely, without a doubt, nix some jobs, it will create others (but try telling that to someone who has already lost their job to a bot and is on month 9 of being unemployed). Growing pains are painful – this evolution is not without figurative fatalities. But AI itself doesn’t have sentience, it’s not “coming” for your job, it’s just a fugn algorithm.

The Carnegie Mellon experiment proves something extremely important to us – just because you label something a CTO, that doesn’t mean it knows how to ship product-market fit, and just because you call something HR, that doesn’t mean it can navigate the nuances of office politics while keeping multiple stakeholders in a pre-designed balance while building “culture.”

Let’s pause to address ethical grey zones of AI

What happens when people inevitably try to automate founders instead of workers? Carnegie Mellon was just the start – you’re tweakin’ if you think that was the end. Many more will read about it and think “but I can make it work better” and get to experimenting, mark my words. This is when we enter labor displacement theater.

We have to ask ourselves – are these experiments just trying to shortcut genuine effort? The answer is hell yes. If I can nix 10 of my least favorite tasks by tomorrow and pay a few cents, as a small business operator, I absolutely will, especially if there’s no learning curve and it’s a “set it and forget it” tool. Wouldn’t you? Don’t lie. In this moment, we have to consider value extraction versus value creation. And quickly.

A final ethical grey area I want to make sure we touch on is the ethics of using AI to simulate human labor under the guise of “experimentation.” At what point do academic voyeurism and research exploration creep into inappropriate human labor replication?

The takeaway for decision makers and founders

AI is amazing, no doubt, but don’t get starry-eyed quite yet – treat it like a tool, not a co-founder. Continue to think independently and critically. Ask yourself “what is this AI tool optimizing for?”

Look at your processes right now and think through who is responsible for AI oversight. What policies do you have in place already (if any), and what should you immediately roll out?

More importantly, what blind spots are we baking into our own workflows by tapping into AI in its current form?

AI is impressive at accelerating processes, not at replacing people who understand context, strategy, or, more importantly, consequences.

AI agents may have failed to build an actual startup because they don’t understand the human aspects of business – clarity, ambition, fiduciary responsibility, compromise, and navigating friction. We should continue to experiment, but thoughtfully. Leadership still requires actual human meat bags. For now.