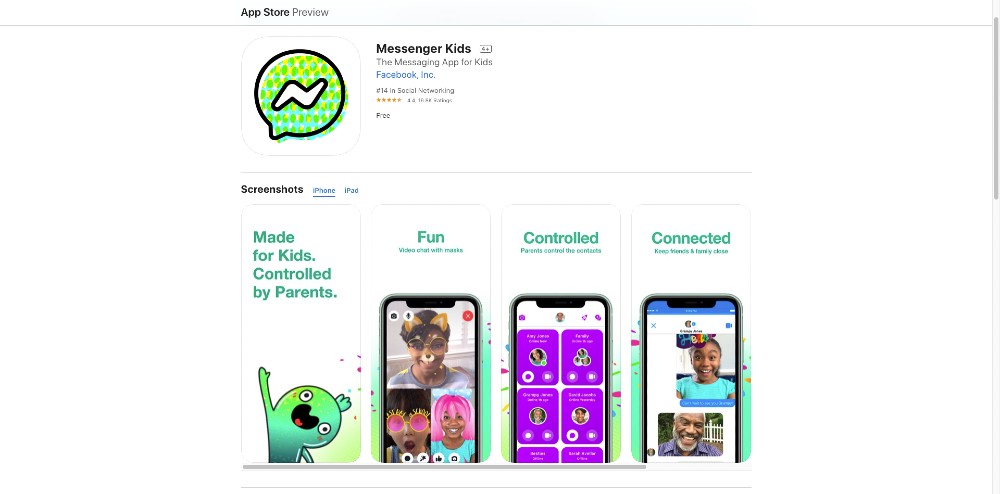

With children around the world attending school online, Facebook is seeing a new surge of users, and people want to keep their children safe. In response, Facebook is expanding their Messenger Kids app to more than 70 countries.

They are also trying to make using the app simpler while simultaneously giving increased control over safety measures to the children’s guardians (here referred to as parents/parental controls, as in the app).

Messenger Kids has been available in the U.S. since 2017 and expanded into Canada and Peru in 2018. The new rollout makes it available in more than 70 countries, with various features introduced in waves. Brazil, India, Japan, and New Zealand are among the new countries with access to Messenger Kids.

Facebook also appears to be making the platform as safe as possible by adding new safety features. Messenger Kids recently had a flaw that allowed children to start group chats without parental knowledge, so Facebook needed to beef up security measures before expanding their children’s platform.

Here’s a look at the three new safety features on Facebook’s Messenger Kids app.

- Supervised friending: Parents can choose to let their children accept, reject, add, or remove friends on their own. Parents are still notified, so they can review the changes and remove or block any friends they want to. They exercise this control through a Parent Dashboard. Previously, parents had to approve any friend requests themselves directly in their child’s account.

- Another feature, geared toward online schooling, allows a parent or a designated adult, such as a teacher, coach, or school director or principal, to start a group chat and invite children into it. This allows class or team discussions to proceed online without delay.

- The other new feature Messenger Kids is rolling out makes the child’s photo and profile name visible to a select group within the child’s and parents’ network, extending to friends of friends. This applies only with parental permission and only within North America, Central America, and South America.

When deciding which features to add to the Messenger Kids app, Facebook consulted their Youth Advisors. This group, according to Facebook, is “a team of experts in online safety, child development and media…including Safer Internet Day creator Janice Richardson and Agent of Change Foundation chairman Wayne Chau.”

Most adults who allow their kids to use electronic devices with internet access realize that kids are curious, resourceful, and often better at tech than they are. It’s good to see giant communication entities like Facebook working to enhance safety measures for children. Connecting to friends, teachers, classmates, and educational resources is a beautiful thing.

Yet we’ve learned to be wary of Facebook and their aggressive data collection. They must strive to ensure that their platform doesn’t lead the vulnerable into dangerous waters.

Joleen Jernigan is an ever-curious writer, grammar nerd, and social media strategist with a background in training, education, and educational publishing. A native Texan, Joleen has traveled extensively, worked in six countries, and holds an MA in Teaching English as a Second Language. She lives in Austin and constantly seeks out the best the city has to offer.