Private social media updates made public through Storify

Julie Pippert, founder and director of Artful Media Group and a well-known speaker and communications expert, shared with AGBeat how she discovered what she believes to be a flaw in the popular service Storify. The flaw can take selected private Facebook status updates, from personal profiles as well as private and secret groups, and make them completely public and visible to anyone.

Storify is a free content curation tool that lets users pull social elements such as photos, videos, and status updates from social networks and combine them into a single embeddable widget. That makes it ideal for bloggers and digital publishers who want to tell the story of an event in its entirety through social reactions. It’s a clever and popular service that brags, “streams flow, but stories last.”

Unfortunately, that slogan has proven true of private Facebook status updates, regardless of a user’s privacy settings. Using the Storify app to grab an update immediately pulls not only the text of the status update, but also the user’s profile picture (which links to their account), the timestamp of the original Facebook post, and the original link.

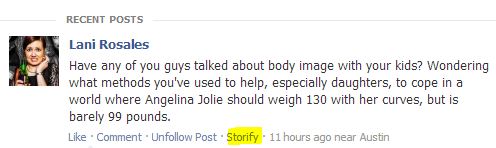

Below is what a user with the Storify Google Chrome Extension sees on an update I posted in a Secret Facebook Group (note the word “Storify,” which is the link that immediately pulls all of the aforementioned data into Storify):

When published in Storify, it appears like so (embedded using the Storify code provided by the service):

This example comes from a Secret Facebook Group made up of a handful of very close friends, where we discuss sensitive health issues each of us has, information that would obviously be harmful if the public saw it.

If you are not a member of the secret group, you still cannot see anything else inside it: not the other status updates, and not who the members are. But my face and name are now publicly associated with a sensitive topic. All it takes is for another group member to innocently pull the update as it appeared in their timeline, either not realizing it came from the group or simply not expecting Storify to allow such a move.

Storify users can only pull status updates from people they are connected with socially, but those people’s privacy settings make no difference, and users can also pull status updates from private groups they belong to. None of this opens a window into those users’ accounts or into the secret groups, but the Storify tool can turn private Facebook updates public, even if only one at a time.

The discovery of the ability to bypass privacy settings

Pippert discovered this bypass through what she calls a “faux pas accident.” Using the Storify app, she shared an update from a friend who believed her Facebook privacy settings were as private as they could possibly be, and both women were surprised at how easily a private account could become public, even if only for one status update.

“I felt so terrible about what happened that I started digging and checking,” Pippert said, “and I figured out that although anything can be copied, screen captured or otherwise shared, anyone who installs the Storify app can do it with one click, even if it is marked or otherwise set to be private.”

Pippert explains that she had shared a very heartwarming update her friend posted about Superstorm Sandy. When she notified her friend, both were alarmed that it could be used publicly. Regardless of the content, her friend did not want her name used in public, which is often the case for executives or government employees whose contracts forbid them from commenting publicly, to the press or otherwise.

No notification, reminder, or restriction

Neither Storify nor Facebook gave any notice that the content was restricted or private, and there is no way for users to opt out of having their content shared on Storify, even when their ultra-private Facebook settings clearly imply that wish.

“I really like Storify and it is so useful, especially with the Chrome app, for capturing content for my job and topics that matter in my work. It’s incredibly efficient,” said Pippert. But she notes that “End of day, you just have to be prepared to have some of your content used beyond your little sphere. But the people using it have a responsibility too. What that is isn’t exactly clear in every case. We do all have to be responsible with content we put out through social media, even privately. My friend put out great content that reflected well on her. But she didn’t want her name out there publicly.”

“Storify enabled me to nearly bypass that, against her wishes,” Pippert said. “After we talked, I offered to remove her quote.”

What about private accounts on Twitter?

When a Storify app user clicks “Storify” next to a public Twitter user’s update as a means of adding that update to their Storify stream, the following appears:

And when a user attempts to Storify a private user’s update, Storify offers no explanation or notice that this can’t be done on a private account; instead, it turns the screen black, like so:

Secret Facebook Group updates no longer secret

We noticed a major difference between how Storify handles private Twitter updates and private Facebook updates: Facebook status updates that would otherwise be private can be read by anyone in a Storify stream.

If your company has a Secret Facebook Group where you collaborate, your prayer group has a Private Facebook Group where you share personal intentions, or your friends have a Secret Facebook Group where they talk about their abusive husbands, all of that is private within Facebook. But Storify grabs the information, and it becomes a Storify update with all of the attached data.

Take note that the embedded status update above has actually been deleted from Facebook, yet you can still see it on Storify. That is troubling. Here is a screenshot in the event someone at either company tweaks something and it disappears.

It’s time to look at the connection between Storify and Facebook

There is likely no malice here on Storify’s part, or even on Facebook’s in how it structures data differently than Twitter does. Still, Storify makes it all too easy to share private information inadvertently, and Facebook is famous for keeping data on its servers even after users delete photos and the like. It’s not in Facebook’s interest to get rid of any data points, since its bread and butter is ad dollars based on aggregated data, and it’s not in Storify’s interest to drop data points either, since they paint an accurate, unfiltered picture of a user’s status update.

Pippert concludes, “It might ultimately be a human problem to solve: capture content from others mindfully and use it thoughtfully, with good communication. Let others know you’re using the content and make sure you are clear to friends your preference about your content being redistributed.”

This is yet another reminder that anything you say anywhere on the web, private or not, can be shared via third-party apps, screenshots, or good old-fashioned copy and paste. Never say anything online that you wouldn’t say in public, because there really is no such thing as privacy; that is sad and unacceptable, but true.

Regardless of human behavior, the connection between Twitter and Storify proves there are ways to actually protect private information, so it is clearly time to examine the connection between Facebook and Storify.

More reading:

Storify Co-Founder implies nothing on Facebook is private

Lani is the COO and News Director at The American Genius, has co-authored a book, co-founded BASHH, Austin Digital Jobs, Remote Digital Jobs, and is a seasoned business writer and editorialist with a penchant for the irreverent.

Scott Baradell

January 18, 2013 at 9:23 am

Excellent, Lani and Julie!

Julie Pippert

January 18, 2013 at 4:34 pm

Thanks, Scott.

AmyVernon

January 18, 2013 at 9:26 am

So glad you wrote about this and Julie tested it out. It once again shows that nothing you write online is truly private. As Julie rightfully pointed out, anyone could screenshot or otherwise share a post at any time, but it takes extra effort and would have to be done purposefully. But with the way the newsfeed is set up, you could easily Storify something that shows up in your newsfeed, not even realizing it’s not public.

I don’t blame Storify for this – they’re using the API Facebook gives them. Facebook needs to shore this up.

Erika Napoletano

January 18, 2013 at 9:39 am

Hear, hear, Amy. Another Facebook privacy issue — when will these be a thing of the past?

Abdallah Al-Hakim

January 18, 2013 at 12:31 pm

good question!

Julie Pippert

January 18, 2013 at 4:34 pm

Yeah, Facebook needs to recognize we’re going to want to use third party apps. I don’t want Storify blocked; I do want better collaboration that lets it be in line with FB settings.

That’s exactly what happened — I easily Storified something from the newsfeed, not knowing it was not public.

I learned my lesson and try to be cautious, and I still use and am a fan of Storify. I just want my confidence back in respecting privacy settings.

Burt Herman

January 18, 2013 at 11:50 am

Thanks for the post and I very much agree with your conclusion — anything posted online in a way that others can see it could be copied, so you should think carefully what you write online. (Or even in an email, for that matter, that could also be easily copied).

This isn’t a technology issue as much as an etiquette issue. Now that everyone has the power to easily publish to the whole world, we all need to think about how to use that power.

Danny Brown

January 18, 2013 at 12:01 pm

Surely the etiquette should be for technology APIs to respect privacy settings and be unable to let users post private group updates, no?

Burt Herman

January 18, 2013 at 2:51 pm

It’s up to you to decide what to share online, and whether to trust the people who can see what you share.

Danny Brown

January 18, 2013 at 3:56 pm

Right. And when it’s to me, I choose to be part of a Facebook Group that’s private. So, it should now be up to any technology scraping feeds to recognize and respect private settings. Maybe something for you guys and Facebook to work out…

Burt Herman

January 18, 2013 at 4:20 pm

We don’t show anything to people who can’t see it already on Facebook. Only other people in that group can see it, so it’s up to you whether you trust them not to share what you post more widely.

Danny Brown

January 18, 2013 at 4:26 pm

You’re missing the point here, Burt – you are showing it to people who aren’t part of that private Facebook group, because you’re allowing these posts to be shown in a public Storify stream. I trust the people I’m part of a private group with – I don’t trust technology that ignores privacy settings and says “Don’t blame us if we post private stuff because someone in the group shared it.”

APIs can recognize privacy settings (why do you think social scoring tools primarily have to use public Twitter feeds for their scores versus private conversations and communities?). It’s easy to shift blame, it’s less easy to do the right thing and build technology that filters private settings and blocks sharing. But the reward for any companies doing this is more than worth the effort.

Julie Pippert

January 18, 2013 at 4:29 pm

Not that simple IMHO. We get used to Facebook restricting us from sharing private content. You can trust people and trust privacy, yet accidentally or innocently share. I learned a lesson the hard way. There’s a point to that.

Julie Pippert

January 18, 2013 at 4:26 pm

That’s a great point, Danny! The tools do need to respect the privacy settings. We can use caution–such as choosing words wisely, setting privacy, being in private groups, etc. But as in this article, even a really good statement that reflected well on the person was not okay with her to share. She shared it in perceived privacy and public share could have negatively affected her job. Not because she said anything wrong, but because she was not able to make a public statement.

Ike Pigott

January 18, 2013 at 9:43 pm

I would enjoy Storify so much more if it had more privacy options of its own.

For example, it’s a great tool for curating a cross-platform, extended conversation. But what if I want to share that compilation with a limited group? Storify has no “Unlisted” option, like YouTube and Posterous have to great effect.

Until it has that feature, I can’t afford to use it.

Nick

January 22, 2013 at 11:58 am

Is this news? A friend can publish your content with Storify or they can take a screenshot of your post. Where is the difference?

christof_ff

January 23, 2013 at 5:45 am

I don’t get what the problem is – they could just as easily take a screenshot, or publish private printed correspondence.

Surely the lesson is don’t trust your innermost thoughts with stupid people who are likely to share it with the world??

Edward Cullen

March 8, 2013 at 12:35 am

Nice post. I fully agree with and am satisfied by your conclusion.
