Artificial intelligence (AI) is racist. Okay, fine, not all AI are racist (#NotAllAI), but they do have a tendency towards biases. Just look at Microsoft’s chatbot, Tay, which managed to start spewing hateful commentary in under 24 hours. Now, a chatbot isn’t necessarily a big deal, but for better or worse, AI is being used in increasingly crucial ways.

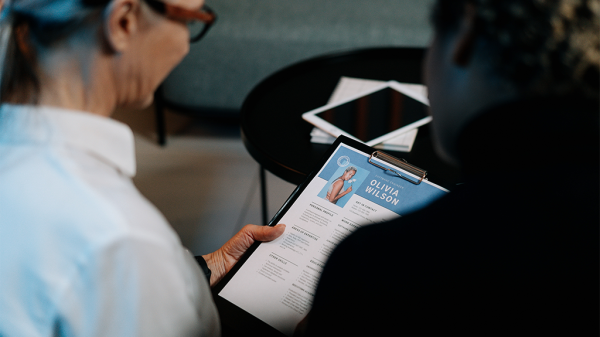

A biased AI could, for instance, change how you are policed, whether or not you get a job, or your medical treatment.

In an attempt to understand and regulate these systems, New York City created a task force called the Automated Decision Systems (ADS) Task Force. There’s just one problem: this group has been a total disaster.

When it was formed in May of 2018, the outlook was hopeful. The task force was composed of city council members and industry professionals, brought together to inspect the city's use of AI and, ideally, arrive at meaningful calls for action. Unfortunately, the task force was plagued by trouble from the beginning.

For example, although the task force was created to examine the automated systems New York City uses, its members weren't granted access to them. Albert Cahn, one of the members of the task force, said that although the administration had all the data the task force needed, the group was not given any information.

Sounds frustrating, right? One potential reason for this massive hiccup is that the administration was relying on third-party systems sold by companies looking to make a profit. In this case, it makes sense that a company would want to avoid scrutiny: it could easily lead to greater regulation or, at the very least, a broader understanding of how its systems really work.

Another overarching problem was the struggle to define artificial intelligence at all. Some automated systems do rely on complex machine learning, but others are far simpler. What counts as an automated system worth examining? The jury is still out.

In the grand scheme of things, AI tech is still in its infancy. We're just starting to grasp what it's currently capable of, much less what it could accomplish. To add to the complications, technology is evolving at breakneck speed. What we want to regulate now might be entirely obsolete in ten years.

Then again, it might not. Machines might be quick to change, but their human creators? Less so. Left unchecked, it's debatable whether creators will work to remove biases at all.

NYC's task force might have failed (its concluding write-up was far from ideal), but the creation of this group signals a growing demand for a closer look at the technology making life-changing decisions. The question now? Who is best suited for the task.

Brittany is a Staff Writer for The American Genius with a Master's in Media Studies under her belt. When she's not writing or analyzing the educational potential of video games, she's probably baking.
